ChatGPT: Total game-changer, still no I in AI

Part 2: It's a developer tool, not a developer

Previously, on…

In case you missed it, in Part 1 I explored using ChatGPT to solve a computational problem. Specifically, I was trying to write an algorithm that would produce mathematical equations based on provided input parameters. The final conclusion I came to was that ChatGPT (and similar models) will not be replacing developers any time soon. As soon as you try to get it to produce anything beyond very simple examples with clear logic, it quickly starts to fail.

Realizing this, I shifted my focus to using ChatGPT as a sort of advanced search engine and live debugger. It’s certainly much better at the former, and as my little experiment progressed, I noticed this becoming much more pronounced. Whether this is a result of OpenAI changing how their publicly available model functions, or simply the types of challenges I was throwing at it, I cannot say. I will also add that most of the ChatGPT sessions were before January 25th, and in some brief testing in recent days, it does seem less ‘willing’ to output code it has constructed beyond very simple examples.

What’s next?

Back to the project. At this point I had generated a file with all the possible equations with 4 numbers that fit the required parameters, and I had a way to solve those equations based on 4 input numbers. My initial task was complete (I can generate hints from the provided solutions) and I had answered my initial question: can ChatGPT write an algorithm? No. So why is there a Part 2? Well, I have been tinkering with Cloudflare Workers lately and I wanted to convert the solver/hints part of the project into a web API. I already had the implementation written in Python; I would just need to map the values to the variables [a,b,c,d], then eval() the resulting expression. A simple enough task, except for one major snafu, something I remembered reading in the Cloudflare docs:

eval() is not allowed for security reasons.

That certainly makes things a bit more challenging. I asked ChatGPT and it provided a reasonable solution: use the new Function syntax instead. Only one problem with that:

new Function is not allowed for security reasons.

No good. What other solutions could ChatGPT provide me? This is about where I started to explore using ChatGPT as a developer tool. I used it to explore options and alternatives when encountering roadblocks, to explain syntax I didn’t understand and to produce and explain simple examples that I could then iterate on. Now my question is: will this really improve my workflow or am I still just awe-struck by its eerily sentient responses?

ChatGPT as a tool

I restated the question in a new prompt, and made sure to stipulate that I couldn’t use eval() or new Function and that I didn’t want to use an existing library like math.js. It proceeded to explain how I could use the Shunting-yard algorithm (wiki) to parse the equations into Reverse Polish Notation (RPN, wiki), also called postfix notation, and then evaluate the resulting postfix strings. It then went on to explain how I could use math.js or eval(), while warning me about the security risks involved in using that function. The first part was great! Then it immediately suggested the very things I had told it I couldn’t use.

I ended up asking more about RPN and the Shunting-yard algorithm since I wasn’t familiar with these concepts. The explanations were clear and verbose, and some of the examples even worked! A full explanation of the algorithm is a little outside the scope of this article, but essentially you iterate over the characters in an expression and use some basic rules to push items onto a stack and pop them off again. The stack holds either the operators (if you’re converting an infix expression to postfix) or the operands (if you’re evaluating a postfix expression).
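To make the evaluation side concrete, here is a minimal Python sketch of a postfix evaluator over the variables a, b, c, d. It assumes single-character tokens and only the four basic operators; `eval_postfix` and `OPS` are hypothetical names, and using `Fraction` for exact division is an assumption made here to sidestep floating-point rounding. Nothing in it needs eval() or new Function: each operator is looked up in a fixed table.

```python
from fractions import Fraction
import operator

# Hypothetical operator table; assumes only the four basic binary operators.
OPS = {
    '+': operator.add,
    '-': operator.sub,
    '*': operator.mul,
    '/': operator.truediv,
}


def eval_postfix(postfix, values):
    """Evaluate a postfix string whose operands are single letters.

    `values` maps each variable name (e.g. 'a') to a number.
    Returns None if the expression divides by zero.
    """
    stack = []
    for token in postfix:
        if token in OPS:
            # Binary operator: the right operand is on top of the stack.
            right = stack.pop()
            left = stack.pop()
            try:
                stack.append(OPS[token](left, right))
            except ZeroDivisionError:
                return None
        else:
            # Operand: push its value as an exact fraction.
            stack.append(Fraction(values[token]))
    # A well-formed postfix expression leaves exactly one value behind.
    if len(stack) != 1:
        raise ValueError(f"malformed postfix expression: {postfix!r}")
    return stack[0]
```

For example, `eval_postfix("ab+cd*-", {'a': 1, 'b': 2, 'c': 3, 'd': 4})` computes (1+2) - 3*4 and returns `Fraction(-9, 1)`.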

It seemed a bit silly to parse all of these equations into one format initially, just to parse them all into a different format later. So I went back to my initial equation generator in Python (gist) and added a function to convert each equation into its postfix form and store both versions.

Here’s the new function:

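```python
# Operator groups by precedence. These live elsewhere in the full script;
# the exact lists here are an assumption (the four basic operators).
op_add = ['+', '-']
op_prod = ['*', '/']

# will return the RPN (postfix) version
# of an equation string with operands a,b,c,d
# e.g. "a+b-c*d" would return "ab+cd*-"
def to_postfix(expr):
    postfix = []
    op_stack = []
    for x in expr:
        if x in ['a', 'b', 'c', 'd']:
            # operands go straight to the output
            postfix.append(x)
        elif x in op_add:
            # every pending operator back to the nearest '(' has precedence
            # >= '+'/'-', so pop them all to keep left-to-right order
            while op_stack and op_stack[-1] != '(':
                postfix.append(op_stack.pop())
            op_stack.append(x)
        elif x in op_prod:
            # only pending '*' and '/' share this precedence
            while op_stack and op_stack[-1] in op_prod:
                postfix.append(op_stack.pop())
            op_stack.append(x)
        elif x == '(':
            op_stack.append(x)
        elif x == ')':
            # flush operators back to the matching '(' and discard it
            while op_stack[-1] != '(':
                postfix.append(op_stack.pop())
            op_stack.pop()
    # flush any operators that remain
    while op_stack:
        postfix.append(op_stack.pop())
    return ''.join(postfix)
```

Great! Now I have a postfix version of each equation that I can use for evaluation. It was trivial to write the JS to evaluate the expressions and return the infix version (the expression before it was converted to postfix) with the values mapped to the variables a,b,c,d. API achieved! Mostly.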
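The second half of that step, mapping the input values back into the stored infix string, is small enough to sketch in Python. This assumes the four values arrive in a,b,c,d order and that the infix string uses only those single-letter variables; `fill_infix` is a hypothetical name.

```python
def fill_infix(infix, values):
    """Replace the variables a, b, c, d in an infix string with numbers.

    `values` is a sequence of four numbers, mapped to a, b, c, d in order.
    """
    # str.maketrans accepts a dict of single characters -> replacement
    # strings of any length, so multi-digit numbers substitute correctly.
    table = str.maketrans({var: str(num) for var, num in zip('abcd', values)})
    return infix.translate(table)
```

For example, `fill_infix("(a+b)*c-d", [8, 3, 12, 5])` returns `"(8+3)*12-5"`, which is the human-readable form a hint would display.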

A quick note before we press on: at this point, this whole project has gone so far beyond the original scope of what I was trying to accomplish (algorithm-wise) and has now ventured into pure silliness — no one should ever implement a solution in this fashion. There were multiple times where I had this feeling of trying to push a square peg through a round hole, but the real fun was in seeing where ChatGPT would lead me to try and solve some uncommon (and perhaps unorthodox) problems. So try not to judge this project as a real-world example — this was just the project I chose to test ChatGPT with. I’m here to learn new things, tinker, and above all else, exert my vastly superior analytical power upon a feeble language model.

Now, back to that Cloudflare Worker. It worked, that’s for sure, but it was (unsurprisingly) slow. Thankfully, just because I stubbornly want to implement a serverless API doesn’t mean I’m limited to JS. Workers also supports WebAssembly, something else I’ve been meaning to try out. I grabbed the emscripten worker template from GitHub (link) and had ChatGPT whip up an implementation of the algorithm to evaluate postfix expressions in C. It once again came close, though I wasn’t expecting it to nail it, and I was able to quickly debug it and make the necessary changes to fix it up. Considering I’m a bit rusty in C, it’s a bit of a toss-up whether it would have been quicker to just write it myself.

But where I quickly ran into issues was dealing with the large arrays of strings I needed to process, and the large array of strings I would need to return. The vast majority of my C-related experience lately has been with Arduino, which adds some classes to make things a bit simpler. I was getting bogged down in memory management, pointers, string null termination and all of the other joys of C. I understand the higher-level concepts, but I’m not always sure how best to implement them. How do things like knowing the size and structure of my input arrays affect how I should architect my solution?
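One concrete way the structure of the input can drive the design, sketched in Python rather than C: assuming every generated equation uses all four operands exactly once with binary operators, each postfix string is exactly 7 characters (4 operands plus 3 operators, and postfix needs no parentheses). Fixed-width records like that can be packed into a single contiguous buffer, so there is no per-string allocation, no array of pointers, and no null terminators to manage. The function names and the 7-character assumption are hypothetical.

```python
# Assumption: every expression uses all four operands once with binary
# operators, so its postfix form is exactly 4 + 3 = 7 characters.
RECORD_LEN = 7


def pack_postfix(expressions):
    """Pack fixed-width postfix strings into one contiguous byte buffer."""
    for expr in expressions:
        if len(expr) != RECORD_LEN:
            raise ValueError(f"expected {RECORD_LEN} characters, got {expr!r}")
    # No separators or null terminators: record i starts at i * RECORD_LEN.
    return "".join(expressions).encode("ascii")


def record_count(buffer):
    """Number of records in a packed buffer."""
    return len(buffer) // RECORD_LEN


def unpack_record(buffer, i):
    """Return the i-th postfix expression from a packed buffer."""
    start = i * RECORD_LEN
    return buffer[start:start + RECORD_LEN].decode("ascii")
```

On the C side, a function receiving such a buffer would only need the pointer and the record count, and could read record i starting at `buf + i * 7`.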

Knowing that ChatGPT wouldn’t be able to properly grok my problem if I gave it a high-level explanation, I decided to compartmentalize my queries. This is where it really came alive for me. I wasn’t looking for basic explanations, or general use cases, or even best practices; I was looking for the trade-offs of different implementations within a very specific context. If I were trying to learn C from scratch or was unclear on how pointers and references work, there are certainly better resources. In my case, it was able to provide the information I was looking for and then apply it to the context I required. That last part is where the real game-changing aspect lies, at least in the model’s current form. Sure, I could have accomplished the same thing with some searching or, if I was in a masochistic mood, by enduring the browbeating from the gurus on Stack Overflow (and those are better options in many cases), but this is still an incredible leap.

So with some guidance from ChatGPT, I was able to get my equation solver in C working. Now I just needed to tie it in with the emscripten template and deploy it… right? Wrong again. I simply couldn’t get this template working for me; it wouldn’t even build. I’ll revisit it to make sure it wasn’t just me being a dolt, but the template referenced a file that didn’t exist, and I had already wasted enough time on this particular bit of silliness. Time for new silliness.

I wasn’t done with WASM just yet, though. I now wanted to explore how quickly I could create something in a language I had never used before. Keeping with the theme of hot new technology trends, I ended up picking a language I hadn’t even seen before: Rust.

Rust to the rescue?

This time I grabbed the Rust worker template (link), which works quite differently from the emscripten one. It uses workers-rs, a Rust SDK for Cloudflare Workers, so you can write the entire worker in Rust.

Back to ChatGPT for the goods; I was going to need a lot of help with this one. I started with my new tried-and-true method: ask for a simple example with a basic explanation, then have it expand on concepts by asking follow-up questions and providing further context. I’m starting to get a sense of when it’s getting bogged down and losing track of what I’m really asking for.

Generally speaking, for such a simple piece of code, I found Rust pretty easy to follow. There were some patterns in ChatGPT’s output that were new to me, and its explanations of them were generally adequate. If I did decide to use Rust for a real project in the future, I would seek out a proper resource for learning it. But when I want to quickly prototype something pretty basic, and I only need to understand the specifics relevant to my use case, ChatGPT was invaluable.

By the end, it took less time for me to write a functioning WASM script entirely in Rust — including the REST API — than it did for me to write just the algorithm in C. Maybe that says more about me as a developer, or about Rust as a language, but I didn’t even have to look up a single thing. My only outside references were the workers-rs readme and the template itself. And it worked. And it was fast.

My original idea was to store the solutions in a Workers KV store, so they wouldn’t be repeatedly calculated. I had already whipped up a worker that checked the KV store for the solution and either returned it or passed the request to the WASM worker to solve. I didn’t even end up needing it; I just implemented it all in Rust!
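That lookup-then-solve worker follows the usual cache-aside pattern. Here is a minimal Python sketch of the flow, where a plain dict stands in for the Workers KV namespace and `solve` stands in for the call to the WASM worker; the function name and key format are hypothetical.

```python
def get_solutions(numbers, kv, solve):
    """Return solutions for `numbers`, computing and caching them on a miss.

    `kv` is any dict-like store standing in for Workers KV; `solve` stands in
    for the (expensive) call to the WASM solver. Both are placeholders.
    """
    # Hypothetical key format: the inputs joined in the order given, since the
    # order decides which value is mapped to a, b, c and d.
    key = ",".join(str(n) for n in numbers)
    cached = kv.get(key)
    if cached is not None:
        return cached  # cache hit: skip the solver entirely
    result = solve(numbers)  # cache miss: compute once...
    kv[key] = result  # ...and store it for the next request
    return result
```

A real worker would differ in the details: KV lookups are asynchronous and values are stored as strings or binary data, so the result would typically be serialized (for example as JSON) before being written.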

Check out the repository here. It contains the original Python script to generate the equations, the Cloudflare Workers (the emscripten one doesn’t currently build), and a Vue.js component that serves as a frontend for the API as well as a hint generator. You can check out a live version of the web component here.

So, did this improve my workflow? Definitely! Maybe? Sometimes.

I did have to spend an inordinate amount of time wrangling ChatGPT to get the type of output I wanted, and it took a while to get a feel for how best to use it. But let’s remind ourselves: this is a very early version of a very new technology. ChatGPT is powerful and at times uncanny, but it isn’t even the limit of what the technology can do in its current state. As software developers, we’re constantly looking for workflow automation and bootstrapping tools to reduce the amount of time we spend doing the boring stuff. Can you imagine a tool that combines the best features from these models and is trained on your own data? The possibilities are boundless! As frightened as I am about the real-world implications of unleashing these new technologies upon the world, this really does feel like a big leap forward.

It’s only a model

I’ve always had this sense that modern computing allows us to create so much, so easily, but everything feels so inaccessible or ephemeral, especially as we produce more and more. The notion that ‘the Internet is forever’ is long gone; things disappear from the digital world all the time now. Most of the data we create isn’t gathered or collected in any meaningful way, so whatever value that data holds, and the context it provides, is lost. The closest we’ve come to this concept is, unfortunately, all of our personal data that is stored and sold as a commodity. But we’re unable to benefit from that, unless you consider being served targeted ads helpful and are somehow immune to propaganda. Even in the case of health data collected from smartwatches, we don’t really take ownership of that data, and the insights extracted are limited to what the platform provides.

I came across this blog post recently where the author loaded their journal entries from the past 10 years into GPT-3 and asked it questions. Putting aside the ethical concerns surrounding AI therapy for a moment, this concept is the most exciting thing about language models for me. There is less separation between humans and computers in terms of what data we can parse and output. I keep seeing people make the case that these models aren’t truly intelligent, they’re essentially just really good at using bullshit to convince you they understand what they’re outputting. I don’t think the distinction between what a machine understands and what it can parse is all that important right now. We can agree that true AI (or AGI — Artificial General Intelligence) doesn’t exist in these models, and is likely still a long ways out. But to describe ChatGPT as “only a language model” ignores the fact that language is humanity’s oldest and most powerful tool. And now we’re training machines on how to use it, not so they can use it — there is no they — but so that we can use it. And how it will be used by us is simultaneously exciting and terrifying.

With all that in mind, as the big tech companies continue to integrate these new technologies into their products, we ought to be extra vigilant about how they implement them. To the public, the inner workings of these models are extremely opaque, especially when looking at a specific output. Where it got the information and how it was parsed are crucial to understanding the value of its responses. Until we have a better grasp of this, take its output with a very large grain of salt. But in cases where you can make that determination yourself, ChatGPT can be very beneficial.

Wrap it up, already

I haven’t even touched on the climate implications due to the vast computational requirements of these models, but I’ll save the rest of my moral and ethical ranting and raving for another venue.

Overall,¹ this project/experiment/excuse-to-play-with-ChatGPT of mine was a fascinating ride. I managed to learn a few new things, I got to experience the true depth of ChatGPT and its capabilities, and I wrote some fun little scripts. Moving forward, I don’t see myself using ChatGPT as a regular resource, but I do find myself using it every now and then when I have a very specific type of question. I expect to see a lot more AI-based developer tools on the horizon, and some of them will even be useful. GitHub Copilot (powered by OpenAI’s Codex) and ChatGPT are just the tip of the iceberg. This isn’t Web 3, or NFTs, or any of the other buzzwords of the day; this really is the next big thing.

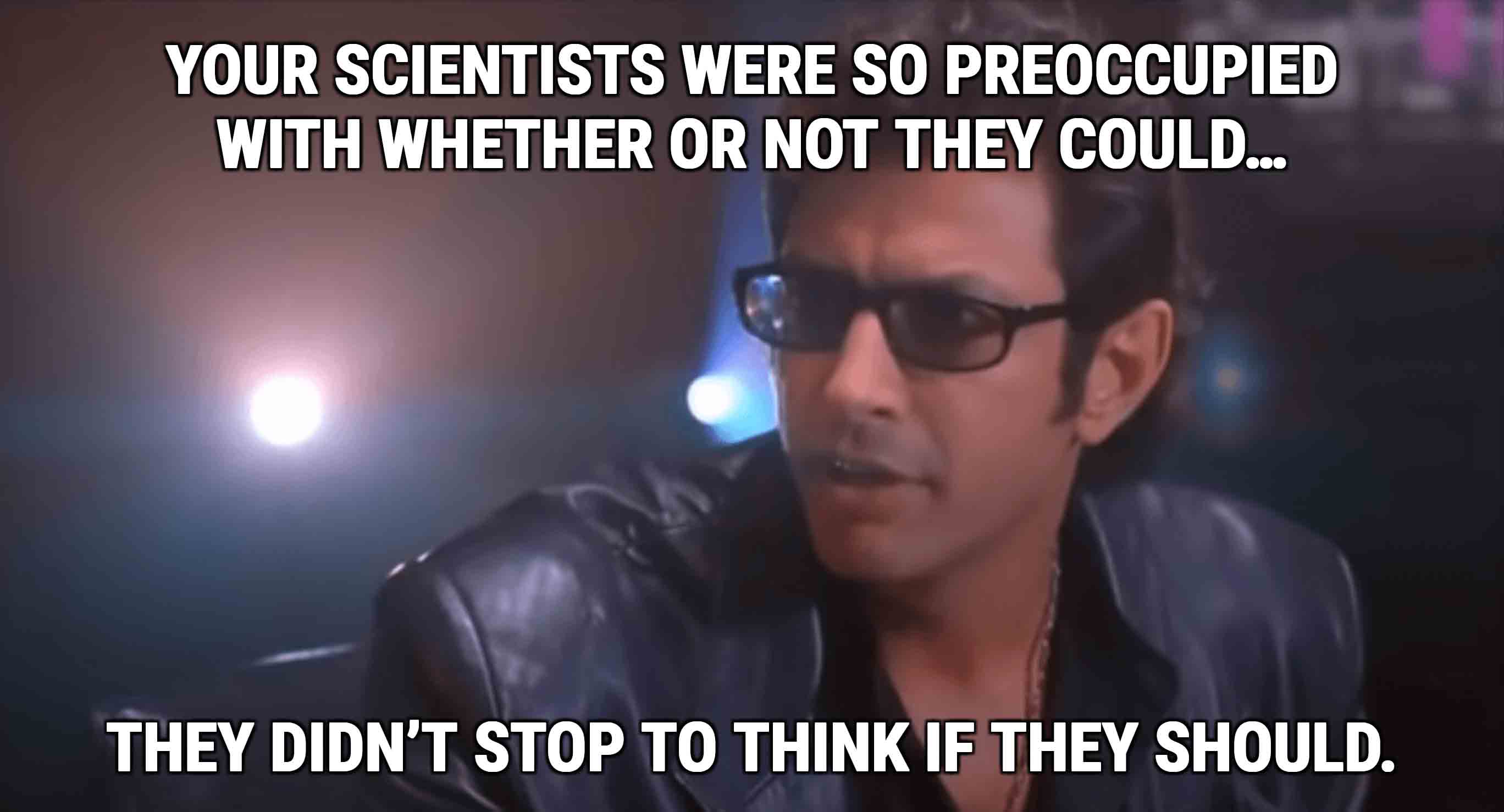

Lastly, for those of you who have forgotten where this all started (like me) — if you take only one thing away from these articles, please let it be this:

Footnotes

1. (Overall, I’ve been hanging out with ChatGPT too much…)